UAE Security Chiefs Flag Data Theft Risks in AI Photo Apps

UAE cybersecurity experts are sounding the alarm about AI photo apps after cloud-based cyberattacks jumped by 50%. Their main concern is apps that create trendy Studio Ghibli-style avatars. These photo transformation tools may look harmless, but they put users’ privacy at risk and could lead to data breaches and identity fraud.

Experts point to several worrying issues. The apps collect biometric data that users can’t change if it is stolen. Uploaded photos also carry hidden metadata that reveals sensitive details about users’ devices and locations. Help AG’s Chief Technology Officer Nicolai Solling points out that people get caught up in the excitement of artistic transformations and often forget the implications of sharing personal images with AI platforms.

UAE Cybersecurity Experts Reveal How AI Photo Apps Extract Personal Data

AI artistic transformations rely on complex data extraction mechanisms that need a closer look. UAE cybersecurity experts discovered that simple photo filters actually work as sophisticated data collection tools that could pose security risks.

The Technical Anatomy of AI Photo Filters

Quick Heal Technologies CEO Vishal Salvi explains how modern AI photo apps use neural style transfer (NST) algorithms to separate content from artistic styles in uploaded photos. These algorithms create detailed biometric profiles by analyzing facial features while they blend user images with reference artwork. The tools also extract feature vectors from images and compare them against existing databases to improve recognition capabilities.
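The comparison step described above can be illustrated with a minimal sketch. It assumes each face has already been reduced to a numeric feature vector and that matching uses cosine similarity, which is one common choice rather than a confirmed detail of any particular app. The names `best_match`, `database`, and the 0.9 threshold are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(query, database, threshold=0.9):
    """Return (name, score) for the closest stored vector, or (None, score) if below threshold."""
    best_name, best_score = None, -1.0
    for name, vector in database.items():
        score = cosine_similarity(query, vector)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score
    return best_name, best_score


# Hypothetical 4-dimensional vectors; real systems typically use hundreds of dimensions.
database = {
    "person_a": [0.9, 0.1, 0.3, 0.2],
    "person_b": [0.1, 0.8, 0.2, 0.7],
}
query = [0.88, 0.12, 0.31, 0.18]  # vector extracted from a newly uploaded photo
match, score = best_match(query, database)
print(match, round(score, 3))
```

The point of the sketch is that once a face is stored as a vector, any later photo of the same person can be linked back to it with a few lines of arithmetic.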

Even a simple artistic transformation involves a detailed analysis of facial characteristics which, unlike passwords, users cannot change if they are stolen. The technology can also pick out the tiniest details in handwritten content.

What Happens Behind the Scenes When You Upload a Photo

Users who submit photos to AI applications share more than just visible image data. Research shows that all but one of the popular AI photo apps examined use uploaded data to train their AI tools. About 75% of the apps require users to give up rights to their personal images for promotional activities.

Beyond facial data, photos contain hidden metadata such as location coordinates, timestamps, and device information. This invisible layer puts users at risk of location-based threats even when the visible content looks safe. Many platforms claim to delete data after processing but remain unclear about what “deletion” really means.

About 25% of these applications keep facial data after creating images, and 20% don’t let users delete their data. Security becomes an even bigger concern as one in five apps fails to encrypt data during transmission.

Why Studio Ghibli Filters Triggered Alarm Bells

UAE cybersecurity professionals worried about the viral Studio Ghibli-style art transformation trend because it spread widely without much transparency. Help AG’s Chief Technology Officer Nicolai Solling warned that these avatars capture detailed facial features that serve as irreplaceable biometric data.

Security experts found these platforms vulnerable to model inversion attacks, in which bad actors might rebuild original photos from stylized Ghibli images. Because the platforms push for fast participation, users often grant access quickly without thinking about the risks of sharing sensitive data.

People get caught up in the excitement of artistic transformations and miss the privacy issues underneath. Most platforms hide vital information about data usage in long terms of service that nobody reads fully. High-quality avatars might help criminals bypass security systems as facial recognition technology gets better.

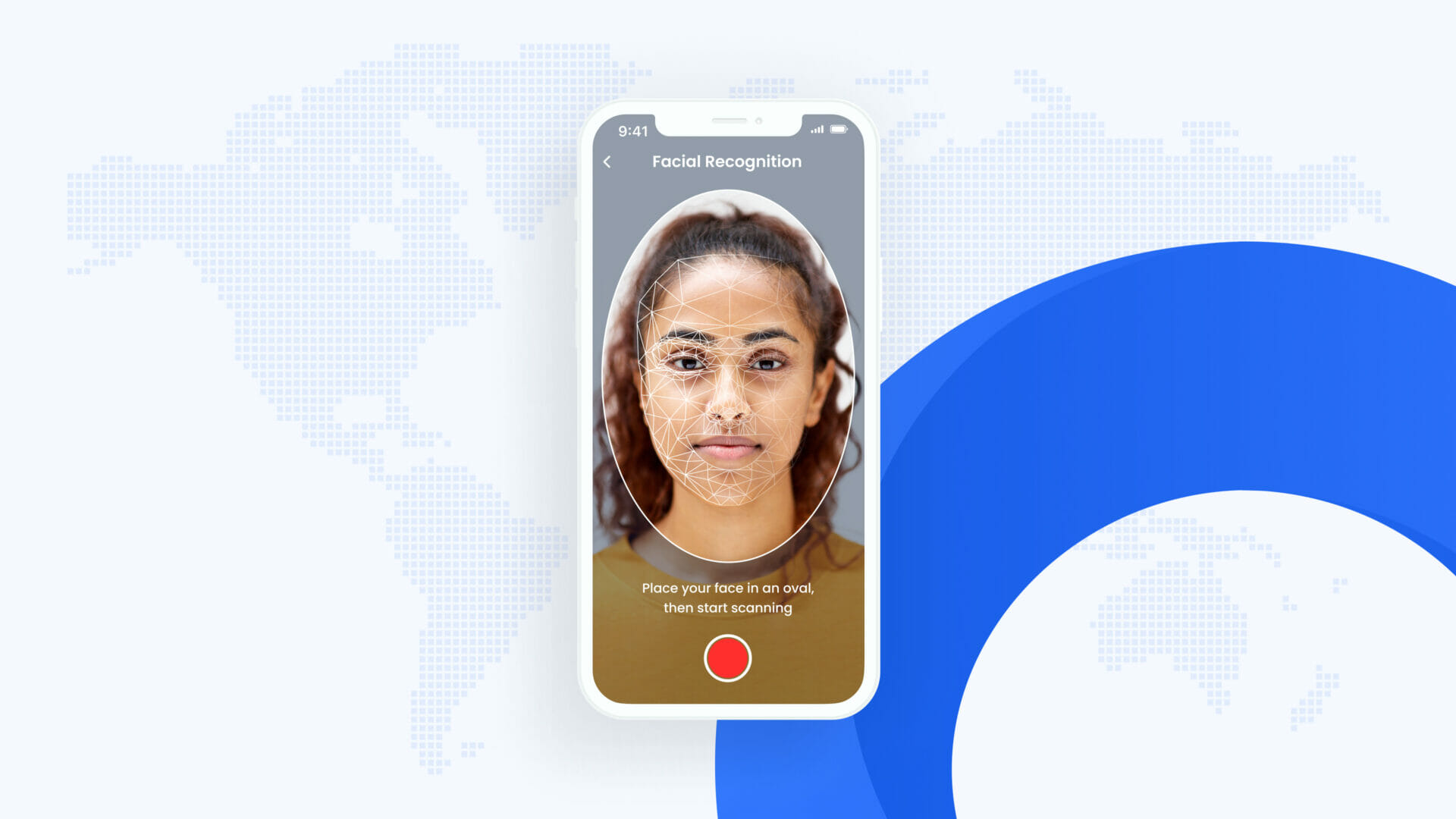

Facial Recognition Creates Permanent Digital Fingerprints in UAE

Facial recognition technology creates unique security challenges for UAE residents because of its permanent nature. Unlike traditional credentials, biometric data creates an unchangeable digital fingerprint. UAE cybersecurity experts are now analyzing the potential risks of photo applications that seem harmless.

Biometric Data Cannot Be Changed Like Passwords

Unlike passwords or credit card details, which you can reset, your facial biometric data stays permanently linked to your identity. You can’t change your facial features if someone breaches your data, which creates serious security risks. The UAE government understands these implications but continues to expand its biometric identification systems. It combines facial recognition with iris scans to raise identification accuracy from 99.5% to potentially 99.95%, which would cut the error rate tenfold, from 0.5% to 0.05%.

Banks in the UAE now use facial recognition systems to verify customers. For example, ADCB’s FacePass system lets users verify transactions without one-time passwords, even from abroad. Even so, these convenient features carry a built-in risk: victims can’t change their biometric identifiers after a data breach.

How Your Face Becomes Part of AI Training Datasets

Facial recognition systems need large training datasets to work. AI applications process huge volumes of images, often without asking permission from people whose data they use. This creates multiple weak points where someone might expose or misuse sensitive personal information.

UAE’s AI developers use these training datasets to improve recognition capabilities. Local researchers have built systems that learn from 2.6 million images of 2,622 faces. These systems learn to spot faces from different angles, lighting conditions, and expressions.

The biggest concern lies in how facial data becomes a permanent part of AI models that no one can remove. A UAE cybersecurity expert explains it simply: “One cannot untrain generative AI. Once the system has been trained on something, there’s no way to take back that information.” The global facial recognition market is projected to grow from AED 18.40 billion in 2023 to AED 46.52 billion by 2030. These numbers show both the economic value and the growing security risks of this technology.
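To put the market figures above in perspective, the implied yearly growth can be worked out with the standard compound annual growth rate formula. This is a back-of-the-envelope sketch that assumes steady compounding over the seven years from 2023 to 2030; the function name is illustrative.

```python
def compound_annual_growth_rate(start_value, end_value, years):
    """Constant yearly growth rate that turns start_value into end_value over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in billions of AED.
cagr = compound_annual_growth_rate(18.40, 46.52, 2030 - 2023)
print(f"Implied annual growth: {cagr:.1%}")
```

Under that assumption the forecast works out to roughly 14% growth per year.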

Hidden Metadata Exposes UAE Residents to Location-Based Threats

Digital photos carry hidden personal information that you can’t see. UAE cybersecurity authorities point to metadata as a key security risk that leaves residents exposed to location-based threats through regular photo sharing.

The Invisible Information in Every Photo You Share

UAE residents’ devices automatically add metadata to their photos: hidden information that follows these images across platforms. This data includes GPS coordinates, timestamps, device details, and camera serial numbers. Apple points out that these coordinates let users search photos by location or view them in geographic collections.

Photos taken with location services turned on create a permanent digital trail that shows your daily habits. This seemingly harmless data lets bad actors track your movements and spot valuable targets. Each vacation photo you share might tell criminals when your home is empty, opening doors to both physical and digital attacks.
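In JPEG files, the metadata described above is normally stored in an APP1 segment near the start of the file, so it can be dropped before a photo is shared. The sketch below uses only the Python standard library and works on raw bytes. It is an illustration under simplifying assumptions rather than a complete scrubber: it handles JPEG only, removes every APP1 segment (which also holds XMP data), and does not touch metadata stored elsewhere, such as IPTC blocks. The function name `strip_exif` and the synthetic sample are hypothetical.

```python
import struct

SOI = b"\xff\xd8"       # Start of Image marker
APP1 = 0xE1             # segment type that carries EXIF (and XMP) metadata
START_OF_SCAN = 0xDA    # compressed image data follows this marker


def strip_exif(jpeg_bytes: bytes) -> bytes:
    """Return a copy of a JPEG with all APP1 segments removed."""
    if jpeg_bytes[:2] != SOI:
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(SOI)
    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        if jpeg_bytes[pos] != 0xFF:
            raise ValueError("malformed JPEG: expected a segment marker")
        marker = jpeg_bytes[pos + 1]
        if marker == START_OF_SCAN:
            out += jpeg_bytes[pos:]  # copy the image data untouched
            return bytes(out)
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2:pos + 4])
        if length < 2:
            raise ValueError("malformed JPEG: invalid segment length")
        segment_end = pos + 2 + length
        if marker != APP1:
            out += jpeg_bytes[pos:segment_end]
        pos = segment_end
    raise ValueError("malformed JPEG: no Start of Scan marker found")


# Tiny synthetic file for demonstration: SOI, APP0, APP1 (fake EXIF), then scan data.
app0 = b"\xff\xe0" + struct.pack(">H", 2 + 5) + b"JFIF\x00"
app1 = b"\xff\xe1" + struct.pack(">H", 2 + 10) + b"Exif\x00\x00GPS!"
scan = b"\xff\xda\x00\x02\x12\x34\xff\xd9"
sample = SOI + app0 + app1 + scan
cleaned = strip_exif(sample)
print(len(sample), "->", len(cleaned), "bytes")
```

Many phones and messaging apps offer a "remove location" option that does something similar; the sketch simply shows that the hidden data is a separable part of the file, not part of the picture itself.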

Real Cases of Metadata Exploitation in the Emirates

A significant UAE case saw hackers break into a bank and demand ransom to keep customer details and bank statements private. Cybersecurity experts have tracked how attackers target UAE residents and use metadata to coordinate sophisticated phishing attacks.

The UAE Penal Code (Article 378) and Cybercrime Law (Article 21) make it illegal to publish content with private information. Many residents don’t know that sharing high-quality images lets criminals extract enough biometric data to create fake digital identities or e-SIMs.

Why Public Wi-Fi Makes the Problem Worse

The Abu Dhabi Digital Authority has warned residents that public Wi-Fi networks make metadata vulnerabilities worse. Hackers set up fake networks with names that look like real businesses. Just connecting to these networks exposes your device information and data.

About 95% of UAE consumers take risks on public Wi-Fi, putting their email and banking details in danger. Criminals watch these networks closely, and two-thirds of residents can’t wait more than a few minutes before connecting to available Wi-Fi.

UAE cybersecurity experts say you should assume someone monitors all your data when using public networks. This risk grows when you share photos loaded with rich metadata.

UAE Cybersecurity Council Implements New Protection Protocols

The UAE has created detailed cybersecurity frameworks to curb dangers from emerging technologies, including AI photo applications.

Multi-Factor Authentication Requirements

The UAE now requires multi-layered verification systems for applications that handle sensitive data. Financial institutions need multi-factor authentication that includes biometric verification for high-risk activities such as changes to personal information and high-value fund transfers. Instead of relying only on passwords, organizations must use risk-based authentication measures that adjust security requirements to the sensitivity of each transaction.

Regulatory guidelines require biometric authentication systems to adopt security protocols that ensure the confidentiality and integrity of personal information. Organizations that deploy multi-factor authentication at login should consider phishing-resistant authenticators in which at least one factor uses public-key cryptography.
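The idea of risk-based authentication described above can be sketched as a small policy function. Everything specific here is an assumption made for illustration: the transaction kinds, the AED 10,000 threshold, the factor names, and the choice to allow a password alone for low-risk actions are not taken from any UAE regulation.

```python
from dataclasses import dataclass

HIGH_VALUE_THRESHOLD_AED = 10_000  # hypothetical cut-off, not a regulatory figure


@dataclass(frozen=True)
class Transaction:
    kind: str                 # e.g. "balance_check", "fund_transfer", "profile_change"
    amount_aed: float = 0.0


def required_factors(tx: Transaction) -> list[str]:
    """Pick authentication factors according to how sensitive the transaction is."""
    factors = ["password"]
    high_risk = tx.kind == "profile_change" or (
        tx.kind == "fund_transfer" and tx.amount_aed >= HIGH_VALUE_THRESHOLD_AED
    )
    if high_risk:
        factors.append("biometric")        # high-risk: add biometric verification
    elif tx.kind == "fund_transfer":
        factors.append("one_time_code")    # medium-risk: add a second, lighter factor
    return factors


print(required_factors(Transaction("balance_check")))
print(required_factors(Transaction("fund_transfer", 500)))
print(required_factors(Transaction("fund_transfer", 50_000)))
print(required_factors(Transaction("profile_change")))
```

Keeping the policy in one function makes it easy to audit which actions trigger biometric checks and to tighten thresholds without touching the rest of the application.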

Data Encryption Standards for App Developers

App developers in the UAE now face strict encryption requirements after extensive data breaches. The UAE’s Data Protection Law (DPL) requires controllers to notify the UAE Data Office and affected individuals immediately if a data breach compromises privacy or security. Controllers and processors must follow principles similar to those of the GDPR, including data minimization and transparency.

Payment service providers must implement resilient policies to protect payment data and personal information. App developers need to ensure their mobile apps contain no hardcoded API keys and keep all data encrypted at rest and in transit.
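Two of the developer practices mentioned above, avoiding hardcoded API keys and refusing unencrypted transport, can be sketched in a few lines. This is a server-side Python illustration under stated assumptions: the environment variable name `PHOTO_API_KEY` and the example URL are hypothetical, and a mobile app would more likely use its platform's secure key store than environment variables.

```python
import os
from urllib.parse import urlparse


def load_api_key(var_name: str = "PHOTO_API_KEY") -> str:
    """Read the API key from the environment instead of embedding it in source code."""
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"{var_name} is not set; refusing to fall back to a hardcoded key")
    return key


def require_https(url: str) -> str:
    """Reject endpoints that would send data unencrypted in transit."""
    parsed = urlparse(url)
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError(f"refusing non-HTTPS endpoint: {url!r}")
    return url


# Example usage (commented out because it depends on the caller's environment):
# endpoint = require_https("https://api.example.com/upload")
# api_key = load_api_key()
```

Failing loudly when the key is missing or the endpoint is plain HTTP is a deliberate choice: a silent fallback is how secrets and unencrypted uploads tend to slip into production.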

How to Report Suspicious AI Applications

The UAE offers multiple channels to report suspicious AI applications:

- The eCrimes platform launched by the Ministry of Interior (available on MoI UAE app)

- The eCrime website operated by Dubai Police

- Aman service by Abu Dhabi Police for confidential reporting

- The “My Safe Society” app from UAE’s federal Public Prosecution

On top of that, residents can report concerns at any police station or call 999 if they need urgent help. Dubai Police’s Cyber Crime Department encourages people to report suspected data compromise through AI platforms.

Under the policy of the UAE’s Telecommunications and Digital Government Regulatory Authority, people can report online content used for impersonation, fraud, phishing, or privacy invasion to licensed internet service providers, who can remove it.

UAE cybersecurity experts warn that AI photo apps pose substantial privacy risks through their data collection systems. These apps collect irreplaceable biometric data and hidden metadata that create permanent digital fingerprints. Users become vulnerable to identity theft and location-based threats.

Cloud-based cyberattacks show how digital threats have become more sophisticated. UAE authorities have responded by implementing strong protection protocols. App developers must now follow strict multi-factor authentication requirements and detailed data encryption standards.

People should be careful when they share photos through AI platforms. They can reduce their risk of data breaches by turning off location services before taking photos and checking privacy policies. UAE residents can report suspicious apps through official channels like the eCrimes platform or Dubai Police’s Cyber Crime Department.

Permanent biometric data collection combined with metadata exploitation creates new challenges for digital privacy. The UAE’s cybersecurity framework adapts to new threats and protects its residents’ digital identities.

Understanding these risks enables users to make better decisions about their online presence. The UAE’s proactive cybersecurity approach and citizen awareness build a better defense against digital threats in our connected world.